Strong AI Is A Theoretical Impossibility

Thesis:

Part I: It has been proven that the human mind cannot be analogous to an electronic (or any other type of) computer, and the functioning of an intellective mind cannot be reproduced (though it can certainly be simulated) by any type of mechanical device, including modern artificial intelligence systems.

Part II: It is further impossible that the human mind is a purely material thing (including some “emergent property” of matter).

—-

Part I: Gödel, Lucas, & Penrose

In 1931, the young mathematician Kurt Gödel published his First Incompleteness Theorem:

"Any consistent formal system F within which a certain amount of elementary arithmetic can be carried out is incomplete; i.e., there are statements of the language of F which can neither be proved nor disproved in F."

The theorem is significant in its own right - it is considered one of the greatest intellectual achievements in history, and netted its author the Albert Einstein Award.

The brilliant mathematician and physicist Roger Penrose called it, "a fundamental contribution to the foundation of mathematics - probably the most fundamental ever to be found."

He then adds, "...I shall be arguing that in establishing his theorem, he also initiated a major step forward in the philosophy of mind.”

On that note, what is relevant to our discussion here is that first the philosopher John Lucas, and subsequently Penrose, have used the theorem to assert (I would say, to demonstrate) that the human mind is not, and cannot be - contrary to a common modern assumption - in any way analogous to a computer or any other formal system in its operations.

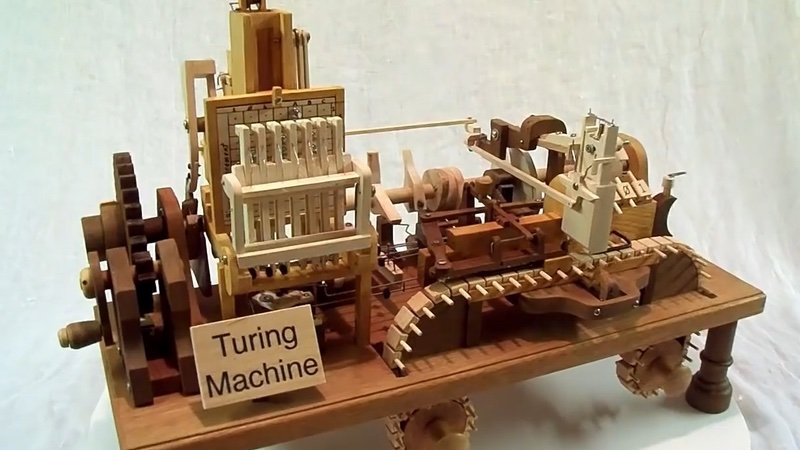

(Incidentally, Gödel’s theorems are closely related to Alan Turing’s proof, published about five years later, that the halting problem is undecidable; in the same work Turing introduced his now-ubiquitous theoretical model of computing.)
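The diagonal trick shared by Gödel’s and Turing’s results can be sketched in a few lines of Python. This is a toy model under stated assumptions, not Turing’s formulation: a "program" is a zero-argument Python generator function, each `yield` counts as one step, and a "decider" is any function someone claims can predict whether such a program finishes. The names `make_contrary`, `halts_within`, `says_never_halts` and `says_always_halts` are hypothetical, introduced only for illustration.

```python
def make_contrary(halts):
    """Build a program that does the opposite of whatever `halts` predicts for it."""
    def contrary():
        if halts(contrary):
            # The decider predicted "halts", so loop forever instead.
            while True:
                yield
        # The decider predicted "runs forever", so finish at once.
        return
    return contrary


def halts_within(program, budget):
    """Step a generator-based program; True if it finishes within `budget` steps."""
    steps = program()
    for _ in range(budget):
        try:
            next(steps)
        except StopIteration:
            return True
    return False


def says_never_halts(program):
    return False


def says_always_halts(program):
    return True


for decider in (says_never_halts, says_always_halts):
    contrary = make_contrary(decider)
    print(decider.__name__, decider(contrary), halts_within(contrary, budget=10_000))
# says_never_halts False True
# says_always_halts True False
```

Each decider’s prediction is contradicted by the program built from it. The step budget only shows that the second program has not finished yet; that it never will is seen by reading its code.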

Gödel’s First Incompleteness Theorem: The Bad News

Gödel’s Incompleteness Theorems (they are closely related, with the second following from the first directly) are not trivial to comprehend.

A bit like the definition of a monad, which is basic once the surrounding context is grokked (no pun intended), Gödel’s theorem is clear to the intellect once it has climbed the necessary scaffolding. But this involves a good deal of effort.

Write Nagel & Newman, in their 1958 explication of the proof,

“Gödel’s paper is difficult. Forty-six preliminary definitions, together with several important preliminary propositions, must be mastered before the main results are reached.”

The slightly modified version of the proof presented in that book is the best presentation I’ve seen that is both complete (all necessary details) and relatively accessible.

Gödel’s First Incompleteness Theorem: The Good News

It’s necessary to understand what the theorem proves, and the implications thereof, but it is not necessary to understand the details of the proof itself to weigh the merit of assertions built atop it. That’s because the theorem is regarded with the highest degree of certainty by the mathematical community. Few proofs of any kind in history have been subject to as much rigor. Thus, it can simply be taken as axiomatic.

It is in the implications of the Incompleteness Theorem that the interesting discussion takes place.

Gödel’s First Incompleteness Theorem: Consistency Subtopic

A formal system is, of course, essentially a "computation or an algorithm” (Penrose). That somewhat loose, intuitive understanding is sufficient to grasp the arguments put forth here.

This succinct definition works too:

"A formal system is an abstract structure used for inferring theorems from axioms according to a set of rules. These rules, which are used for carrying out the inference of theorems from axioms, are the logical calculus of the formal system. A formal system is essentially an axiomatic system."
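To make the definition concrete, here is a minimal Python sketch of a well-known toy formal system, the MIU system from Douglas Hofstadter’s Gödel, Escher, Bach (used here purely as an illustration; it is not drawn from the sources cited above). Its single axiom is the string MI, and its four rules of inference rewrite strings mechanically. The function names and the step and length limits on the search are assumptions made for this sketch so that the enumeration terminates.

```python
from collections import deque

AXIOM = "MI"


def successors(s):
    """Apply each of the four MIU rules of inference wherever it fits."""
    out = set()
    if s.endswith("I"):              # Rule I:   xI -> xIU
        out.add(s + "U")
    if s.startswith("M"):            # Rule II:  Mx -> Mxx
        out.add(s + s[1:])
    for i in range(len(s) - 2):      # Rule III: replace any III with U
        if s[i:i + 3] == "III":
            out.add(s[:i] + "U" + s[i + 3:])
    for i in range(len(s) - 1):      # Rule IV:  delete any UU
        if s[i:i + 2] == "UU":
            out.add(s[:i] + s[i + 2:])
    return out


def theorems(max_steps, max_len=12):
    """Strings derivable from the axiom in at most `max_steps` rule applications
    (ignoring any intermediate string longer than `max_len`)."""
    seen = {AXIOM}
    frontier = deque([(AXIOM, 0)])
    while frontier:
        s, depth = frontier.popleft()
        if depth == max_steps:
            continue
        for t in successors(s):
            if len(t) <= max_len and t not in seen:
                seen.add(t)
                frontier.append((t, depth + 1))
    return seen


print("MIIII" in theorems(2))   # True: MI -> MII -> MIIII by Rule II twice
print("MU" in theorems(8))      # False
```

No search, however long, will produce MU: every rule leaves the number of I’s unchanged, doubles it, or reduces it by three, so starting from one I the count is never a multiple of three. That fact is established by reasoning about the system, not by a derivation inside it.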

The topic of consistency as it applies to formal systems (referenced in the theorem) is substantial in itself, and beyond the scope of this paper. We will use this working definition:

A system is called inconsistent if it is possible, using its rules, both to prove some proposition and to prove its contrary. Otherwise it is consistent.

It is important to know that in an inconsistent formal system, every statement can be proven, along with its negation. The term means something rather different from its informal connotation.

An illustration: If we have an inconsistent formal system, starting with the rule that 1 == 0, we can prove, say, 13 == 7:

$$1 = 0 \;\Rightarrow\; 6 \cdot 1 = 6 \cdot 0 \;\Rightarrow\; 6 = 0 \;\Rightarrow\; 6 + 7 = 0 + 7 \;\Rightarrow\; 13 = 7$$

(The very reason that inconsistent systems are not bound by Gödel’s First Incompleteness Theorem is that they can be used to “prove” anything.)
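The principle behind the 1 == 0 illustration above, usually called the principle of explosion, can be stated and machine-checked in Lean 4. This is a minimal sketch: the first example derives an arbitrary proposition Q from P and ¬P; the second applies the same step to the arithmetic illustration, using `decide` to obtain ¬(1 = 0) from ordinary arithmetic, so that once the hypothesis 1 = 0 is admitted, 13 = 7 (or anything else) follows.

```lean
-- From a proposition and its negation, any proposition Q follows.
example (P Q : Prop) (hp : P) (hnp : ¬P) : Q :=
  absurd hp hnp

-- Arithmetic already proves ¬(1 = 0); adding 1 = 0 makes the system
-- inconsistent, and then 13 = 7 is derivable.
example (h : (1 : Nat) = 0) : (13 : Nat) = 7 :=
  absurd h (by decide)
```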

Gödel’s First Incompleteness Theorem in a Nutshell

With that, we’ll take a quick look at the meat of the proof - but feel free to skip this section.

The aforementioned explication by Nagel & Newman is available online. I would encourage the technically-minded reader to give it a couple hours of study, because it is quite fascinating.

(Gödel’s genius was expressed in the method he invented to map formal statements to natural numbers in a deterministic and unique fashion, via prime factorization. It is a bit astounding that such a scheme works, and can be used to prove something so fundamental.)
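The numbering idea can be sketched in Python. Each symbol gets a small positive code; a formula (a sequence of symbols) is mapped to the product of successive primes, each raised to the code of the symbol in that position. Because prime factorization is unique, the number can always be decoded back into the formula. The symbol table below, with ASCII stand-ins for logical symbols, is a hypothetical choice for illustration, not Gödel’s or Nagel & Newman’s exact assignment.

```python
from itertools import count

# Hypothetical symbol codes (ASCII stand-ins: "~" not, "v" or, ">" implies, "E" exists).
SYMBOL_CODES = {"~": 1, "v": 2, ">": 3, "E": 4, "=": 5, "0": 6,
                "s": 7, "(": 8, ")": 9, ",": 10, "+": 11, "*": 12}
CODE_SYMBOLS = {code: sym for sym, code in SYMBOL_CODES.items()}


def primes():
    """Yield 2, 3, 5, 7, ... by trial division against the primes found so far."""
    found = []
    for candidate in count(2):
        if all(candidate % p for p in found):
            found.append(candidate)
            yield candidate


def godel_number(formula):
    """Encode a formula as 2**c1 * 3**c2 * 5**c3 * ... for symbol codes c1, c2, ..."""
    n = 1
    for p, symbol in zip(primes(), formula):
        n *= p ** SYMBOL_CODES[symbol]
    return n


def decode(n):
    """Recover the formula by reading off the exponent of each successive prime."""
    symbols = []
    for p in primes():
        if n == 1:
            break
        exponent = 0
        while n % p == 0:
            n //= p
            exponent += 1
        symbols.append(CODE_SYMBOLS[exponent])
    return "".join(symbols)


print(godel_number("0=0"))            # 243000000, i.e. 2**6 * 3**5 * 5**6
print(decode(godel_number("s0=s0")))  # s0=s0
```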

—

Because even the Nagel/Newman explication is substantial, I want to offer one more very succinct version of Gödel’s proof, distilled from what physicist Stephen Barr discusses at length in an appendix.

The proof sketch that follows relies on the stronger assumption that F, a formal system, is both consistent and sound, meaning that its theorems are true:

1. F is a formal system that is consistent and sound.

2. There exists a statement, G, which says “This statement is not provable in F” (that is, not provable using F’s axioms and rules).

3. Note that G is self-referential; that’s the mind-twisting bit.

4. Now assume that G actually is provable in F. (This assumption will be shown to be false below; this is a proof by contradiction.)

5. Since F is sound, anything proved in F is true, so if the assumption holds, G is true.

6. So, if the assumption holds, it must really be true that G is not provable in F, since that is what G asserts.

7. But now we have a contradiction: we assumed G is provable in F, yet G, which must be true, says it isn’t.

8. Thus, our assumption was false, and G is actually not provable in F.

9. Because what we’ve proved (that G is not provable in F) is exactly what G asserts, we have also shown that G is true.

10. Thus we have shown that G is true and cannot be proven within the formal system F.

In other words, if F is a consistent (and sound for the purposes of this approximation) formal system, it cannot prove G. But we can see G to be true. Thus we have shown that there are true statements that cannot be proved by F.

(The “approximation” of assuming not just consistency but soundness makes this version of the proof simpler.

But, of course, this is insufficient; it lacks formalized rigor. For that one must turn to Nagel & Newman or Gödel’s original version.)

—-

That’s it. It’s a bit of a mind-bender at first, but once you get it, you see that it does demonstrate that no consistent formal system capable of elementary arithmetic is complete; there is always some truth - that we can prove - that is outside of F.

(To put things another way, F cannot “know” this. To do so, it would, to put it casually, have to have the ability to “see outside” itself - and formal systems can’t do that. This is what the proof shows.)
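The self-reference in G can look like a verbal trick, but it can be built without any circularity. Gödel did it arithmetically; a loose programming analogue is a quine-like construction, in which a text contains a template plus a quoted copy of that same template. The Python sketch below is only that analogy, not Gödel’s diagonal lemma: TEMPLATE and SOURCE are names chosen for illustration, and SOURCE ends up being the text of a two-line program that, when run, rebuilds exactly SOURCE.

```python
# A template with a slot (%r) for a quoted copy of itself; %% is a literal %.
TEMPLATE = 'TEMPLATE = %r\nSOURCE = TEMPLATE %% TEMPLATE'

# Filling the slot with the template's own repr yields a program text
# that describes how to reconstruct itself.
SOURCE = TEMPLATE % TEMPLATE

namespace = {}
exec(SOURCE, namespace)                # run the generated two-line program
print(namespace["SOURCE"] == SOURCE)   # True
```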

—-

Gödel’s proofs apply to computer programs, which are also formal systems. For any consistent program P that can carry out elementary arithmetic, one can find a true statement, G(P), that it can neither prove nor disprove.

This should be obvious to any programmer. You wrote the software - you know how it works, what it “does” or at least what it can do (via training).

If you’ve written a program to perform addition, for example, you know it is trivially easy to find some true statement - say, regarding multiplication - that the program cannot demonstrate.

(Note that while we may have imperative, sequential-style programs in mind, these things apply equally well to neural nets, which, after training, are simply deterministic or stochastic mappings of input to output.)

The Lucas-Penrose Argument

In 1961, John R. Lucas, a philosopher at Oxford University, produced a paper, based on Gödel's Theorem, arguing that the human mind cannot be analogous to a computer program. He wrote,

“Gödel's theorem seems to me to prove that Mechanism is false, that is, that minds cannot be explained as machines. So also has it seemed to many other people: almost every mathematical logician I have put the matter to has confessed to similar thoughts, but has felt reluctant to commit himself definitely until he could see the whole argument set out, with all objections fully stated and properly met. This I attempt to do.”

(Gödel did not believe that the human mind could be explained entirely in material terms either - he called that idea “a prejudice of our times.”)

The Oxford physicist Roger Penrose expanded upon Lucas’ arguments in two books, The Emperor’s New Mind and Shadows of the Mind.

Gödel proved that if one knew the rules of a formal system - knew the program that a computer uses - one can always in a certain sense “outwit the system (computer).” The Lucas-Penrose Argument merely takes this one step further, demonstrating that if we were computers, or directly analogous to computers/formal systems of any type, we would be able to “outwit” ourselves - which is blatantly nonsensical.

[Objections are to be dealt with in a subsequent piece, but one I will speak to now: “We might in fact be ‘computers’ that simply don’t know the rules of the formal system.”

Such an objection is natural, and, incidentally, about the only one that does not involve denying the ability of the human mind to reason properly. The best answer is this: Though we can't take apart our brains and figure out how they work, if our minds are running a program, that program could be run on any type of computer - including one we build and completely understand. That is all that matters.]

The Internet Encyclopedia of Philosophy contains a succinct reproduction of the last major version of Penrose’s argument; I have replaced F with H below for clarity vis-a-vis Gödel:

(1) suppose that “my reasoning powers are captured by some formal system H,” and, given this assumption, “consider the class of statements I can know to be true.”

(2) Since I know that I am sound, H is sound, and so is H’, which is simply H plus the assumption (made in (1)) that I am H (incidentally, a sound formal system is one in which only true statements can be proven). But then

(3) “I know that G(H’) is true, where this is the Gödel sentence of the system H’” (ibid). However,

(4) Gödel’s first incompleteness theorem shows that H’ could not see that the Gödel sentence is true. Further, we can infer that

(5) I am H’ (since H’ is merely H plus the assumption made in (1) that I am H), and we can also infer that I can see the truth of the Gödel sentence (and therefore given that we are H’, H’ can see the truth of the Gödel sentence). That is,

(6) we have reached a contradiction (H’ can both see the truth of the Gödel sentence and cannot see the truth of the Gödel sentence). Therefore,

(7) our initial assumption must be false, that is, H, or any formal system whatsoever, cannot capture my reasoning powers.

QED

That’s Penrose. Lucas was the first to take the brilliant “next step” beyond Gödel’s theorem and simply assume H to be a human mind, facilitating the proof by contradiction that H cannot actually be a formal system.

Below is the original Lucas argument, summarized from Barr (ibid), with my comments interspersed. I find this version of the proof somewhat clearer:

“First, imagine that someone shows me a computer program, P, that has built into it the ability to do simple arithmetic and logic. And imagine that I know this program to be consistent in its operations, and that I know all the rules by which it operates.”

Any programmer would know these things about his own program.

“Then, as proven by Gödel, I can find a statement in arithmetic that the program P cannot prove (or disprove) but which I, following Gödel's reasoning, can show to be a true statement of arithmetic. Call this statement G(P). This means that I have done something that that computer program cannot do. I can show that G(P) is a true statement, whereas the program P cannot do so using the rules built into it.”

Again, it is obvious to any programmer that his program cannot prove a statement it doesn’t know about.

“Now, so far, this is no big deal. A programmer could easily add a few things to the program - more axioms or more rules of inference - so that in its modified form it can prove G(P). (The easiest thing to do would be simply to add G(P) itself to the program as a new axiom.) Let us call the new and improved program P'. Now P' is able to prove the statement G(P), just as I can.”

“At this point, however, we are dealing with a new and different program, P', and not the old P. Consequently, assuming I know that P' is still a consistent program, I can find a Gödel proposition for it. That is, I can find a statement, which we may call G(P'), that the program P' can neither prove nor disprove, but which I can show to be a true statement of arithmetic. So, I am again ahead of the game.

However… the programmer could add something to P' so that it too could prove the statement G(P'). By doing so he creates a newer and more improved program, which we may call P". This race could be continued forever. I can keep 'outwitting' the programs, but the programmer can just keep improving the programs. Neither I nor the programs will ever win. So, we have not proven anything. But here is where Lucas takes his brilliant step. Suppose, he says, that I myself am a computer program. That is, suppose that when I prove things there is just some computer program being run in my brain. Call that program H, for 'human.' And now suppose that I am shown that program. That is, suppose that I somehow learn in complete detail how H, the program that is me, is put together. Then, assuming that I know H to be a consistent program, I can construct a statement in arithmetic, call it G(H), that cannot be proven or disproven by H, but that I, using Gödel's reasoning, can show to be true.

But this means that we have been led to a blatant contradiction. It is impossible for H to be unable to prove a result that I am able to prove, because H is, by assumption, me. I cannot 'outwit' myself in the sense of being able to prove something that I cannot prove. If we have been led to a contradiction, then somewhere we made a false assumption. So let us look at the assumptions. There were four: (a) I am a computer program, insofar as my doing of mathematics is concerned; (b) I know that program is consistent; (c) I can learn the structure of that program in complete detail; and (d) I have the ability to go through the steps of constructing the Gödel proposition of that program. If we can show that assumptions b, c, and d are valid, then we will have shown that a must be false. That is, we will have shown that I am not merely a computer program.”

QED

I assert that this simple logical chain (resting as it does on Gödel) should be intuitively obvious and recognized as accurate by any software engineer.

In addition, we will see, subsequently, that none of the assumptions b, c, and d can be refuted without effectively throwing out the mind as capable of knowing anything at all (including that the Lucas-Penrose argument is false!).

Part II: The Mind Cannot Be Material

Before countering objections to the Lucas-Penrose Argument, it will be helpful to closely examine what is essentially the counter notion - that minds are formal systems, and thus either material things or capable of being replicated by material things, and see where that brings us.

Philosophical Materialism holds that “matter is the fundamental substance in nature, and that all things, including mental states and consciousness, are results of material interactions of material things.”

We are going to argue herein that materialism produces a model of the mind that cannot account for its observable properties, that results in various contradictions and absurdities, and that no one, in practice, behaves as if they believed.

As a prelude to that, here is a trivial logical proof that Materialism is false:

1. Materialism says that only matter exists

2. Abstract concepts are not matter

3. Abstract concepts exist

4. Materialism is false

Abstractions

Abstractions, also known as universals, such as geometric truths, mathematical truths (the constant pi, to take one common example) and much more, are objective and immaterial in nature.

To be sure, a given person's understanding of pi may fall short of its actual nature, but its nature certainly exists all the same: It is the ratio of a circle's circumference to its diameter. This abstraction exists apart from time and space, has no matter, and is not dependent in any way upon matter (the concept exists no matter how many physical circles do).

What is pi “made of”? Certainly not matter. In fact, it is not “made of” - as in “composed of” - anything. It is elementary and cannot be reduced into anything smaller; it is atomic (indivisible) as are all abstractions.

Furthermore, pi, like every mathematical truth, does not exist in a mathematical vacuum but stands in relationships to other (often very complex and non-obvious) truths. The prime numbers offer a striking example:

Prime numbers have their own taxonomy. Twin primes are two apart. Cousin primes are four apart. And sexy primes are six apart, six being sex in Latin… I know that an absolute prime is prime regardless of how it is arranged: 199, 919, 991. Palindromic primes are the same forward and backward—133020331. Tetradic primes are palindromic primes that are also prime backward and when seen in a mirror, such as 11, 101, and 1881881. A beastly prime contains 666. 700666007 is a beastly palindromic prime. A depression prime is a palindromic prime whose interior numbers are the same and smaller than the numbers on the ends: 75557, for example. Conversely, plateau primes have interior numbers that are the same and larger than the numbers on the ends, such as 1777771. Invertible primes can be turned upside down and rotated: 109 becomes 601. The only even prime number is 2. Since all other primes are odd, the interval between any two successive primes has to be even, but no one knows a rule to govern this.

(We do not invent mathematical reality - we discover it.)
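Several of the arithmetic claims in the passage above can be checked mechanically. The Python sketch below uses plain trial division (adequate for numbers this small) and checks only a few representative claims: the rearrangements of 199, the depression prime 75557, the 109/601 inversion, and the even gaps between primes. Note that the gap claim holds for successive odd primes; the pair 2, 3 differs by 1. The helper name `is_prime` is our own; the other numbers in the passage can be checked the same way.

```python
from itertools import permutations


def is_prime(n):
    """Trial division up to the square root of n."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False
        d += 1
    return True


# "Absolute" (permutable) prime: every rearrangement of the digits of 199 is prime.
arrangements = {int("".join(p)) for p in permutations("199")}
print(sorted(arrangements), all(is_prime(n) for n in arrangements))  # [199, 919, 991] True

# Depression prime: a palindrome whose equal interior digits are smaller than the ends.
print(is_prime(75557), str(75557) == str(75557)[::-1])  # True True

# Invertible prime: 109 rotated 180 degrees reads 601.
print(is_prime(109), is_prime(601))  # True True

# Successive odd primes differ by an even number (checked here below 10,000).
odd_primes = [n for n in range(3, 10_000) if is_prime(n)]
print(all((b - a) % 2 == 0 for a, b in zip(odd_primes, odd_primes[1:])))  # True
```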

Nominalists assert that abstractions do not really exist – only individuals’ approximations or conceptions of them are real. But note that immediately the nominalist is appealing to universals: To suggest that some particular can be compared to some standard or ideal presupposes that the latter exists. If we examine 1,000 imperfect circles together with 1,000 individuals who all (somehow, quite implausibly) cannot grasp the concept of circle, we can still judge these circles and conceptions of circularity by the measure of true circularity. If even one mind understands circularity, it exists.

We must then ask how universals (abstractions) would exist in a purely physical “mind.” How could it be possible for matter – which is finite in time & space – to “hold” conceptions that have no such boundaries (in fact, are entirely immaterial)? How can circularity – which encompasses all circular objects, meaning an infinite number of them – be entirely contained by a pattern of neurons which is naught but gooey matter?

That is only the beginning of the problems, however. If we say that circularity or pi are represented by the state of a set of neurons, how is it possible that these concepts are represented in exactly the same way in different minds (brains) - or even in the same way in the same brain at two different points in time?

The state of our bodies, including our brains, is constantly changing, and no two are even close to identical.

Note that the brain holding an image or symbol that refers to an abstraction is not the same thing. It is possible to see & recognize a circle - software can do this - without understanding circularity. Circularity is not just an aspect of some material things, but an abstract concept that exists apart from any physical instantiations - so are all mathematical truths and all concepts in the realms of logic, morality, and more.

How would the concept of circularity know how to express itself in the form of a pattern of neurons?

For these reasons and others, the notion of matter “thinking” has traditionally been regarded as absurd.

—-

It’s also worth noting that it’s not clear that the power of understanding is related at all to physical complexity. Example: 1 + 1 = 2. To comprehend this simple mathematical truth, one needs to understand three things: the number one, equality, and the operation of addition. All of these things are almost perfectly simple. There is really nothing at all to suggest that such comprehension is possible with a very large number of neurons but not some smaller number - nothing at all except the assumption that intellect is something that somehow “emerges” from matter at some sufficient level of complexity.

Logic, Knowledge, Certainty, Truth

“You are nothing but a pack of neurons,” goes the saying from one famous Materialist. Let’s take a look now at what this would mean for something as basic as the laws of logic, and as important as knowledge itself.

The short version: If this is true, we aren’t capable of knowing it. In fact, we aren’t capable of knowing anything at all, or of distinguishing truth from falsehood with anything better than chance.

That is because, if our minds are neurons (rather than something that makes use of them), every thought, belief, etc., is the result of physical and chemical processes, and any relationship they might have to mathematical or other truth would be - coincidental.

What would the thinking process really be, in such a model? There is either no connection between thoughts, which are just successions of neural states, or any connection is based somehow on physical or chemical properties.

Here is a classic, trivially simple deductive argument:

- Socrates is a man.

- All men are mortal.

- Therefore, Socrates is mortal.

We will examine how this looks in the materialist model of the mind (as discussed by Feser and many others).

In this model, mental states represent symbols which become encoded in the brain by causal connections to the real world. If objects of type A regularly cause brain events of type B, then B will come to represent A.

One serious problem with that theory is that there is really no way to “tag” such symbols with causal events except by some sort of special pleading. For example, will seeing a cat - A - “impress” upon the brain the concept of cat, per se (an abstraction!), or will it be rather the cat’s fur, the reaction of the light reflected from the cat on the optic nerve, the reflexive action of petting the cat, or any one of many events in a complex causal chain? In reality, it takes an interpreting mind to choose the beginning or end of a causal chain and make that the definitive event. The materialist models of mind do not meaningfully address this or the model’s other problems.

But it gets worse still. In this model, symbols are electrochemical states and thus only electrochemical properties generate further symbols - that’s the whole point of the model, which is a best-shot at explaining thinking in purely material terms.

But if thoughts are symbols, which are electrochemical brain states, then a logical progression from one thought to another, such as occurs in deductive reasoning (and all logical thought of any kind), is not dependent upon the meaning of the symbols at all.

If

“Socrates is a man” actually meant (i.e., was interpreted as) “It’s raining in London”

And “All men are mortal” actually meant “Pigs can fly”

And “Therefore, Socrates is mortal” actually meant “Redheads can be testy”

that completely nonsensical conclusion could still follow from the premises in such a symbol-driven “mind.”

The point is that symbols, even by definition, have no intrinsic interpretation - they have only a meaning assigned to them by an intellect. Nothing counts as a symbol apart from some interpreter. Thus, reasoning purely in symbols - a formal system model of the mind - is almost inherently nonsensical.
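The point about symbols can be made concrete with a small Python sketch of purely syntactic inference (our own illustration, not a model taken from Feser or the other sources). The function `barbara`, named after the traditional label for this syllogistic form, matches tokens by identity only and never consults what any token means; `rename` is a hypothetical helper that consistently relabels tokens. Relabeling the premises produces the correspondingly relabeled conclusion.

```python
def barbara(minor, major):
    """From ("member", x, A) and ("all", A, B), derive ("has", x, B).

    The rule checks only whether tokens are identical, never what they mean.
    """
    kind1, x, a = minor
    kind2, a2, b = major
    if kind1 == "member" and kind2 == "all" and a == a2:
        return ("has", x, b)
    return None


def rename(statement, mapping):
    """Replace each token by its image under `mapping`, leaving others unchanged."""
    return tuple(mapping.get(token, token) for token in statement)


minor = ("member", "Socrates", "man")   # Socrates is a man.
major = ("all", "man", "mortal")        # All men are mortal.
print(barbara(minor, major))            # ('has', 'Socrates', 'mortal')

# Relabel the content tokens arbitrarily; the derivation goes through unchanged.
mapping = {"Socrates": "q17", "man": "z3", "mortal": "k9"}
print(barbara(rename(minor, mapping), rename(major, mapping)))  # ('has', 'q17', 'k9')
```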

The meaning of symbols is not relevant to a machine, but it is very relevant to actual thinking. A calculator does not “know” that “2,” “4,” and “+” are anything more than symbols, because it does not need to, because it’s already been programmed to produce “4” from “2 + 2.” It is not thinking - it’s had its thinking done for it a priori, by its creator.

Again and again one finds that attempts to explain the mind in purely material terms end up in this sort of vicious circle that actually explains nothing at all.

—-

The materialist now objects that this isn’t really implied, that actual consciousness somehow “emerges” from matter - and that that process is also the key to actual Strong AI.

The problem with it is twofold:

1. There’s absolutely no evidence for it, nor even the most tentative hypothesis describing such a thing.

2. It actually doesn’t get around any of the problems - if matter can’t contain abstractions, “emergent” matter can’t either. Whatever might magically “emerge” from matter cannot possess any properties that matter, per se, does not.

(To protest with, “Obviously, this can happen - it did with humans” is to beg the question, as in the logical fallacy of that name. Also: It simply can’t happen because it’s inherently nonsensical, as is explained above.)

Ridiculousness

It’s been said that to assert that thought is matter or is caused by matter is “as if one were to say that the number two could be heated by a Bunsen burner.”

That is the heart of the ridiculous nature of this hypothesis. There is a sort of impenetrable impedance mismatch here; thinking is not material; matter cannot think; and that is that.

Law-professor-turned-philosopher Phillip Johnson put it this way: “A theory that is the product of a mind can never adequately explain the mind that produced the theory. The story of the great scientific mind that discovers absolute truth is satisfying only so long as we accept the mind itself as a given. Once we try to explain the mind as a product of its own discoveries, we are in a hall of mirrors with no exit.”

What the materialist is really trying to do is to get rid of the mind, but he cannot do this without making reference to intellective powers in his very assertions. He sweeps the mind under the rug again and again as he simultaneously pulls the rug away; he is chasing his tail in a circle of madness.

Is the mathematical brilliance that is, say, tensor calculus (on which General Relativity rests) reducible to neurons firing? Again, such notions are absolutely nonsensical prima facie.

Yet the materialist has no other explanation accessible to him.

This is important stuff. Fortunately, as I’ll comment on a bit below, no one really takes the materialist mind model seriously in practice. If we did, everything would be at stake: All knowledge, all science, all mathematics, all communication, all human intellective activity and thus society itself, period.

As the biologist J.B.S. Haldane argued, “If materialism is true, it seems to me that we cannot know that it is true. If my opinions are the result of the chemical processes going on in my brain, they are determined by chemistry, not the laws of logic.”

(Haldane foolishly recanted that position at the advent of the computer, not understanding that they are simply machines.)

No One Behaves As If It’s True

Fortunately for society, no one really behaves as if the materialist model of the mind were true.

People know they understand, they know deductive logic is valid, they know mathematics is valid (anyone who can reason at all understands, at the least, that one plus one is two and it cannot be any other way), they know beliefs are not caused by chemistry, and they defend mathematical truth, moral beliefs, and similar things with vigor for these reasons.

Further, we know that our thoughts are free: We can, at will, turn our mind to any subject that we wish, instantly.

As British mathematician G.H. Hardy put it, “317 is a prime number, not because we think it so, or because our minds are shaped in one way rather than another, but because it is so, because mathematical reality is built that way.”

Such sentiment - which is, at bottom, nothing but human experience and common sense - is entirely antithetical to the notion of material “minds.”

Mind: Its Defining Characteristics

If we had to distill the “nature of mind” down to its most basic intrinsic properties, we would end up with these things:

Intentionality/Causality

Understanding/Certainty

Our minds exhibit intentionality - the ability to experience and direct purpose. Matter may have purpose too, but if it does, it’s an immutable property and not under ‘its’ control.

For example, matter imposes upon spacetime a curvature which we call gravity, but it cannot choose to do something else instead. Sodium and chlorine react chemically, trading electrons, to become salt, but anyone who asserted that, one day, they might choose to make water instead should be regarded with suspicion.

Not so the mind. We intend things. We plan things. We desire outcomes and construct events to, if possible, secure them. We direct our minds toward purposes outside them.

Secondly, we can comprehend, we can understand, we can know - with certainty.

There are truths that are contingent and truths that are “of necessity.” That 317 is a prime number belongs to the second category. (If that’s too big of a stretch, consider 7 instead.) Such a truth must hold; no universe that could exist can violate it, no matter what, because it follows directly and undeniably from the nature of prime numbers.

Note also that freedom is a prerequisite of knowledge. The truth that 2 + 2 = 4 is something presented to our intellect that our wills are able to acknowledge or reject, freely. We see this truth, yet we can choose to deny it.

Again, these properties are something inherently foreign to matter, in any configuration. No one can dispute this - they can only assert that, still, somehow, such things magically “emerge” from matter, creating something much greater and of an entirely different nature than its cause.

The Nature of Computers

With that notion of mind in mind, we can draw contrast with the properties of machines, including computers and their software.

Computers running software systems are machines, and thereby entirely deterministic (or with an intentional stochastic element), exactly like any other machine, though some are far more complex than most. (It is this complexity which masks the logical fallacy of the “thinking machine.”)

If computers “think”, or are capable of thinking, then so do electric pencil sharpeners, which perform actions based on input in exactly analogous fashion. Thinking in the sense of a true intellect involves understanding, not the rote performance of actions, which is what computers do; this is all they do (every software developer should understand this).

If one is inclined to accuse me of chasing straw men in the form of thinking pencil sharpeners, be aware that the man who invented the term artificial intelligence, John McCarthy, wrote that, “Machines as simple as thermostats can be said to have beliefs, and having beliefs seems to be a characteristic of most machines capable of problem solving performance.”

While people like McCarthy might pine for a day when they’ll be able to lose a moral debate to a super-intelligent coffee maker, some of us feel obligated to point out that such faulty premises betray a fundamental misunderstanding of the subject.

(And if you believe I’m criticizing a caricature of his position, you’re welcome to read the linked article and offer a challenge.)

—

I offered some bare assertions above regarding the capabilities and limitations of software systems. Let me explain them.

Computers (I will use this term interchangeably with software system, including neural nets) manipulate symbols, transforming input to output. This encompasses all they do.

This is true of all formal systems - it is a defining characteristic. But the pertinent point is this: Computers do the “mechanical parts” of “reasoning,” precisely the parts that neither involve nor require true understanding.

Again, any software engineer should understand this quite readily. You build the “intelligence” into your software - your program doesn’t do anything you have not spelled out algorithmically, down to the last detail.
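To make the symbol-manipulation point concrete, here is a hypothetical toy formal system. The tally-mark notation and the two rules are my own illustration and are not drawn from any source cited here. It “adds” numbers purely by shuffling marks. Every step is spelled out in advance, and nothing in the program represents what a number is.

```python
# Hypothetical toy formal system, for illustration only.
# Numbers are tally marks: 2 is "||", 3 is "|||". "+" is just another symbol.
RULES = [
    ("+|", "|+"),  # move one mark from the right of "+" to its left
    ("+", ""),     # when no mark follows "+", erase it
]


def rewrite(s: str) -> str:
    """Repeatedly apply the first matching rule (at its first occurrence) until none applies."""
    while True:
        for pattern, replacement in RULES:
            if pattern in s:
                s = s.replace(pattern, replacement, 1)
                break
        else:
            return s  # no rule matched: the string is in normal form


print(rewrite("||+||"))   # ||||
print(rewrite("||+|||"))  # |||||
```

The program reaches the “right answer” for 2 + 2 without any notion of two, four, or addition. It only finds patterns and substitutes strings.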

As before, this is also true of neural nets, which many programmers have no direct experience with. They are mappings from input to output, produced by training on data. They are either entirely deterministic or have a stochastic (pseudo-random) element engineered by the programmer.
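Both cases can be shown in a minimal Python sketch. It assumes a hypothetical two-layer network whose weights (`W_HIDDEN`, `B_HIDDEN`, `W_OUT`, `B_OUT`) are made-up values standing in for trained ones. The sketch is not a model of any real system. The forward pass returns the same output for the same input every time. The “random” sampling step is fully determined by the seed the programmer supplies.

```python
import math
import random

# Hypothetical toy network: 2 inputs -> 2 tanh hidden units -> 1 output.
# The weights are made-up values standing in for the result of training.
W_HIDDEN = [[0.5, -1.2], [0.8, 0.3]]
B_HIDDEN = [0.1, -0.2]
W_OUT = [1.5, -0.7]
B_OUT = 0.05


def forward(x):
    """Deterministic input -> output mapping: the same x always yields the same number."""
    hidden = [
        math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(W_HIDDEN, B_HIDDEN)
    ]
    return sum(w * h for w, h in zip(W_OUT, hidden)) + B_OUT


def sample_answer(x, rng):
    """Engineered stochastic element: squash the output to (0, 1) and draw from a PRNG."""
    p = 1.0 / (1.0 + math.exp(-forward(x)))
    return "yes" if rng.random() < p else "no"


x = [0.4, -0.9]
print(forward(x) == forward(x))  # True: no variation without a random source

# Two generators seeded identically produce identical "choices".
rng_a, rng_b = random.Random(42), random.Random(42)
run_a = [sample_answer(x, rng_a) for _ in range(10)]
run_b = [sample_answer(x, rng_b) for _ in range(10)]
print(run_a == run_b)  # True
```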

Pseudo-randomness is not to be mistaken for individuality, nor for thinking.

Yet One More - Quantum Mechanics

There is a third, entirely independent basis for treating the mind as non-material: Quantum Mechanics.

In the von Neumann–Wigner interpretation, sometimes described as a variant of the traditional Copenhagen interpretation, the mind must be some non-physical entity, entirely distinct from the material world.

As Wikipedia puts it, “The mind is postulated to be non-physical and the only true measurement apparatus.”

“The rules of quantum mechanics are correct but there is only one system which may be treated with quantum mechanics, namely the entire material world. There exist external observers which cannot be treated within quantum mechanics, namely human (and perhaps animal) minds, which perform measurements on the brain causing wave function collapse.”

Henry Stapp has argued for the concept as follows:

“From the point of view of the mathematics of quantum theory it makes no sense to treat a measuring device as intrinsically different from the collection of atomic constituents that make it up. A device is just another part of the physical universe... Moreover, the conscious thoughts of a human observer ought to be causally connected most directly and immediately to what is happening in his brain, not to what is happening out at some measuring device... Our bodies and brains thus become ... parts of the quantum mechanically described physical universe. Treating the entire physical universe in this unified way provides a conceptually simple and logically coherent theoretical foundation...”

Though “fascinating” hardly does justice to this theory (or to quantum mechanics in general), I consider this argument less forceful than the others, because there do seem to be viable alternative interpretations; this is science, not logical demonstration.

Conclusions

This paper has demonstrated that the notion of so-called Strong AI, of legitimately “thinking” machines, is an oxymoron, even an absurdity - despite the zeitgeist.

In support of this I have offered two quite independent bodies of evidence, the Lucas-Penrose argument based on Gödel’s Incompleteness Theorem, and the contradictions and nonsensical conclusions that result from the model of a material mind.

These two completely independent lines of reasoning create a whole greater than the sum of their parts. Rather than being assumed inevitable, “Conscious AI” should be seen as something beyond even “unlikely.” (I vote for “impossible.”)

In a follow-up, I will explore the criticisms of the Lucas-Penrose argument. Here is the spoiler: every one of them rests on a denial either that the human mind knows what it knows or that it is consistent. (Keep in mind that it is not colloquial “consistency” we’re talking about, but the very low bar of not being entirely inconsistent, that is, of not affirming every possible logical contradiction.)

To save the notion of material minds, these critics give up all confidence in the mind itself, including their firm convictions that material minds are possible.

It is an argument that saws off the branch it rests on.
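The point about the “very low bar” of consistency rests on the principle of explosion. In classical logic, a system that proves even one contradiction proves every statement whatsoever. For that reason, being consistent in Gödel’s sense amounts to no more than not affirming everything. In Lean 4 the whole derivation is a single term using the core library’s `absurd`. The theorem name `explosion` is my own label.

```lean
-- Principle of explosion: from P and ¬P, any proposition Q follows.
theorem explosion (P Q : Prop) (hp : P) (hnp : ¬P) : Q :=
  absurd hp hnp
```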

—-

However, it should be noted that this theoretical topic has almost no bearing on the real-world power and applicability of AI systems. AI is a tool that is only beginning to realize its potential, and AI systems are most certainly going to have a powerful impact on society on multiple levels.

AI systems are machines. They are powerful because silicon microprocessors can be run at enormously high clock speeds (billions of cycles per second), because they can be used in parallel, and because computer storage systems can make available enormous quantities of information. But, most important of all, they are powerful because people have created the neural net software that translates input to output at these speeds and with access to this information.

In a sense, ChatGPT knows more than you do. But, in a more important sense, it “knows” nothing at all, never will, and also doesn’t know what it doesn’t know. That is why it can be so “smart” yet still express “logic” of this sort: